Background

I had been running an old PC, purchased from the university's surplus and salvage, as a makeshift server for some time. During that time I ran a number of Minecraft servers on it for my friends. When they asked when the next server would be up, I took that as an opportunity to upgrade my setup.

The Machine

I had decided that I wanted to get my hands on some enterprise-grade hardware for the new server and turned to Reddit's r/homelab to begin my research. Many users recommended the Dell PowerEdge R720 as a good homelab server. I then went to eBay to see what deals I could find and ended up settling on a PowerEdge R520 that came with two Xeons, 128 GB of memory, and space for eight drives.

Proxmox as a Hypervisor

With the arrival of the new hardware I was ready to get everything set up. I had decided to try a hypervisor this time, as opposed to my last server, where I had simply run Ubuntu on bare metal. The server started right up, and after adjusting some BIOS settings I was able to reach the iDRAC Lifecycle Controller from my local machine. I used its web interface to “deploy” Proxmox on the system. At this point everything was going smoothly, but that wouldn’t last for long.

Storage Issues

In anticipation of the new server, I had purchased an old 8 TB SAS drive from eBay to be its main storage. I have a large collection of media that I host on a Plex instance, and that media alone needed at least 1.5 TB. When I purchased the drive I had not noticed that it was SAS rather than SATA, but after doing some research on the R520 I concluded that SAS was supported, so the difference did not matter. I was mistaken. My configuration of the R520 came with the S110 PERC, which is a software RAID controller, unlike the other available options, which were hardware RAID cards. To my surprise, the S110 did not support SAS drives.

I opened up the machine and found a single cable running from the storage array at the front of the machine to a header labeled “SATA1” on the motherboard. Right next to that header was one labeled “SAS1.” I tried moving the cable to that port, only for the front panel display to throw an error stating that there was an issue with the drive cable. It turns out that while the SATA and SAS headers look alike, the cables are not interchangeable. At this point I just wanted to get the server spun up and deal with all of this later, so I ran out to the store, grabbed the first SATA hard disk I could find, and threw that in for now.

LXC Containers

Proxmox uses LXC for the containers it deploys. I had already decided to use containers rather than VMs because of their lower footprint and quicker spin-up times. After some research into how these containers work, I was up and running with an Ubuntu container in no time. With this new container, I split the preallocated LVM-thin storage into two parts and used one of them to hold all the media copied over from my previous Plex instance. I then used the Ubuntu container to install and run Plex. From this point onward I had a good feel for how the containers operated, and I spun up a couple of other Ubuntu instances for various services I had been hosting on my previous server.

Returning to Storage Issues

With the server operational, I pulled the drives from my old server and added them to the storage array, bringing my total storage up to 4 TB. This was still lackluster, and I really wanted to make my SAS drive work. I read through the service manuals for the R520 and discovered that, while I did not have a hardware RAID card, my motherboard had a slot for one; that is why it had the SAS1 port, which routes the drive bays through to a PERC card. I went to eBay and found that I could get an H310 Mini PERC card fairly cheaply, and it should (fingers crossed) work in my machine. I also ordered a SAS cable, thanks to the insight gained during my earlier experiments.

Flashing the PERC

When the H310 Mini arrived, I slotted it into the motherboard and ran the SAS cable from the storage array to the motherboard. I discovered that there were two ports on the storage array, one for the left four bays and one for the right four. I decided to keep the SATA cable on the left four bays, which I had already populated with SATA drives, and used the SAS cable just for the right four. I then slotted my drive in and fired up the server to see what progress had been made. Success: the server detected the RAID card. However, when I entered its configuration menu, I could see that it detected the drive but reported that the drive was locked. Back to Google to find the source of the issue. It turned out that I had purchased a drive with 4Kn (4K native) sectors, which was not compatible with the current firmware on the PERC because that standard did not even exist when the firmware was released. Further research led me to discover that you could flash the RAID card into something called “IT Mode,” which allowed it to operate without the restrictions Dell had placed on it. This was possible because the H310 was actually an LSI card that Dell had rebranded for their servers. Following this guide, I was able to flash the card, and it finally allowed me to pass my drive through to Proxmox as a non-RAID disk.
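
To tell which kind of drive you have before a controller or partitioning tool complains, Linux exposes each disk's reported sector sizes in sysfs. The sketch below reads `/sys/block/<dev>/queue/logical_block_size` and `physical_block_size` and classifies the drive as 4Kn, 512e, or 512n. It is a minimal illustration, assuming a Linux host where the drive is visible as a plain block device (for example, once the controller passes it through); the device name `sdb` in the comments is hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def read_sector_sizes(device: str, sysfs_root: str = "/sys/block") -> tuple[int, int]:
    """Return (logical, physical) sector sizes in bytes for a block device.

    `device` is the kernel name (e.g. the hypothetical "sdb"), not the /dev path.
    """
    queue = Path(sysfs_root) / device / "queue"
    logical = int((queue / "logical_block_size").read_text().strip())
    physical = int((queue / "physical_block_size").read_text().strip())
    return logical, physical


def describe_format(logical: int, physical: int) -> str:
    """Classify a drive's sector layout from its reported sector sizes."""
    if logical == 4096:
        return "4Kn"  # 4 KiB native: the OS sees 4096-byte sectors
    if logical == 512 and physical == 4096:
        return "512e"  # 4 KiB physical sectors emulating 512-byte logical ones
    if logical == 512:
        return "512n"  # traditional 512-byte sectors
    return f"other ({logical}-byte logical sectors)"
```

On a real host you would call `describe_format(*read_sector_sizes("sdb"))`; a 4Kn drive reports 4096 for both values, which is exactly the case that older controller firmware and tools can trip over.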

4Kn Strikes Again

With access to the drive, I tried to use fdisk to partition it, only to receive an odd error that said nothing useful about the root cause of the problem. Countless hours of forum reading later, I discovered that the issue was the sector size once again. I found a package called sg3_utils whose sg_format tool can low-level format the entire drive, changing its sector size in the process. After reading the documentation, I started the formatting job. It ended up taking about 26 hours, and during that time the storage bus was very slow to access any other drive in the system, so I decided to let it run and come back later. Upon returning, I found that the format had completed successfully, and I could now partition and mount the drive. After this, I copied all my media to the new drive, redid the bind mounts for my containers to point to it, and was on my way.
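
The reformat and the bind-mount changes each come down to a single command. The helper below builds the argument lists for `sg_format` (from sg3_utils) and for Proxmox's `pct set` mount-point option, and only prints them unless told otherwise. It is a sketch under assumptions: the 512-byte target size, the container ID `101`, and the paths `/mnt/media` and `/media` are hypothetical, and `sg_format --format` erases the whole drive, so check the sg_format man page and the device path before passing `execute=True`.

```python
from __future__ import annotations

import shlex
import subprocess


def sg_format_argv(device: str, sector_size: int = 512) -> list[str]:
    """Build an sg_format command that low-level formats `device` to `sector_size`.

    WARNING: running it destroys all data on the drive and can take many hours.
    The 512-byte default is an assumption; pick what your controller and OS need.
    """
    return ["sg_format", "--format", f"--size={sector_size}", device]


def pct_bind_mount_argv(vmid: int, index: int, host_path: str, ct_path: str) -> list[str]:
    """Build a Proxmox `pct set` command adding bind mount mp<index> to a container."""
    return ["pct", "set", str(vmid), f"-mp{index}", f"{host_path},mp={ct_path}"]


def run(argv: list[str], execute: bool = False) -> None:
    """Print the command; only run it when execute=True (dry run by default)."""
    print(shlex.join(argv))
    if execute:
        subprocess.run(argv, check=True)
```

For example, `run(sg_format_argv("/dev/sdX"))` prints `sg_format --format --size=512 /dev/sdX`, and `run(pct_bind_mount_argv(101, 0, "/mnt/media", "/media"))` prints `pct set 101 -mp0 /mnt/media,mp=/media`. Keeping the dry run as the default makes it harder to wipe the wrong disk by accident.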

The Minecraft Server Begins

With access to this powerful new hardware, I was ready to set up the Minecraft server. I decided to give Folia a try for the server software because of its ability to multi-thread. Despite it being in alpha, I was not discouraged, and I compiled the jar from source since the PaperMC team did not publicly release a jar as they did for their other projects. At first I attempted to use a web panel for server management, but after slow load times and general unresponsiveness I decided to do the managing myself.

I created a new user in the unprivileged container I was running the server in, and then created a tmux session to serve as the server console. I ran the Folia instance and began writing scripts for server management. I created a basic start script that uses Aikar’s Flags for the JVM arguments, and then set up a cron job to take care of daily server restarts. At this point I was ready to let my friends have at it and decided I would solve problems as they came up during the launch.
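
A start script and restart job along these lines might look something like the following. The sketch builds the `java` command line with Aikar's flags (the set PaperMC documents for heaps under 12 GB) and drives the console through `tmux send-keys`. The session name `minecraft`, the jar name `server.jar`, the 10 GB heap, and the wait times are all hypothetical, and the fixed sleep after `stop` is a simplification that assumes the server finishes saving within that window.

```python
from __future__ import annotations

import shlex
import subprocess
import time

# Aikar's flags as documented by PaperMC for heaps under 12 GB; larger heaps
# use different G1 values, so check the current docs before copying these.
AIKAR_FLAGS = [
    "-XX:+AlwaysPreTouch", "-XX:+DisableExplicitGC", "-XX:+ParallelRefProcEnabled",
    "-XX:+PerfDisableSharedMem", "-XX:+UnlockExperimentalVMOptions", "-XX:+UseG1GC",
    "-XX:G1HeapRegionSize=8M", "-XX:G1HeapWastePercent=5", "-XX:G1MaxNewSizePercent=40",
    "-XX:G1MixedGCCountTarget=4", "-XX:G1MixedGCLiveThresholdPercent=90",
    "-XX:G1NewSizePercent=30", "-XX:G1RSetUpdatingPauseTimePercent=5",
    "-XX:G1ReservePercent=20", "-XX:InitiatingHeapOccupancyPercent=15",
    "-XX:MaxGCPauseMillis=200", "-XX:MaxTenuringThreshold=1", "-XX:SurvivorRatio=32",
    "-Dusing.aikars.flags=https://mcflags.emc.gs", "-Daikars.new.flags=true",
]

SESSION = "minecraft"  # hypothetical tmux session name


def start_argv(jar: str = "server.jar", heap_gb: int = 10) -> list[str]:
    """Build the java command line; Xms equals Xmx, as Aikar recommends."""
    heap = f"{heap_gb}G"
    return ["java", f"-Xms{heap}", f"-Xmx{heap}", *AIKAR_FLAGS, "-jar", jar, "--nogui"]


def send_to_console(text: str, session: str = SESSION) -> None:
    """Type `text` into the tmux pane running the server console and press Enter."""
    subprocess.run(["tmux", "send-keys", "-t", session, text, "Enter"], check=True)


def daily_restart(warn_seconds: int = 60, stop_grace: int = 30) -> None:
    """Warn players, stop the server, then relaunch it in the same tmux pane."""
    send_to_console(f"say Server restarting in {warn_seconds} seconds")
    time.sleep(warn_seconds)
    send_to_console("stop")
    time.sleep(stop_grace)  # crude: assumes the world has saved by now
    send_to_console(shlex.join(start_argv()))
```

A crontab entry such as `0 5 * * * python3 /home/minecraft/restart.py`, where the hypothetical `restart.py` imports this module and calls `daily_restart()`, would run the restart every day at 05:00. Relaunching through the same tmux pane keeps the console attached to the session the rest of the management relies on.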

Folia, Definitely in Alpha

The server started out fine but eventually ran into issues that caused players to be randomly kicked with a “KeepAliveTimeout.” From my research, the server sends a keep-alive packet to each client at regular intervals to make sure it is still connected, and it puts a time limit on how long the client has to respond; if no response arrives in time, the server assumes the connection is dead and terminates it. It turns out something in the way Folia works was hogging all the processing power, leaving the server unable to process these responses before the timeout was reached. After much troubleshooting, I decided to return to regular Paper for the server hosting.
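
The keep-alive check described above can be modeled in a few lines. This is a simplified, single-connection sketch, not Paper's or Folia's actual code, and the 15-second interval and 30-second timeout are illustrative assumptions. What it shows is that the deadline is enforced by the server's own loop, so if that loop is starved and never handles a reply the client did send, the connection still times out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KeepAliveTracker:
    """Simplified server-side keep-alive check for a single connection.

    The interval/timeout defaults are illustrative assumptions, not values
    taken from Paper or Folia.
    """

    interval: float = 15.0  # seconds between keep-alive packets
    timeout: float = 30.0   # seconds allowed for the reply to be processed
    pending_id: Optional[int] = None
    sent_at: float = 0.0
    next_id: int = 0

    def tick(self, now: float) -> str:
        """Called from the server loop; returns "sent", "ok", or "timeout"."""
        if self.pending_id is not None:
            if now - self.sent_at > self.timeout:
                return "timeout"  # no reply handled in time: treat the client as dead
            return "ok"
        if now - self.sent_at >= self.interval:
            self.pending_id = self.next_id  # "send" a new keep-alive carrying this ID
            self.next_id += 1
            self.sent_at = now
            return "sent"
        return "ok"

    def on_reply(self, reply_id: int) -> None:
        """Handle a client's keep-alive response; only the matching ID counts."""
        if reply_id == self.pending_id:
            self.pending_id = None
```

In a healthy loop, `on_reply` clears the pending check shortly after each packet goes out. In the starved case, the reply sits unprocessed, and the first `tick` more than 30 seconds after the send returns `"timeout"` even though the client answered.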

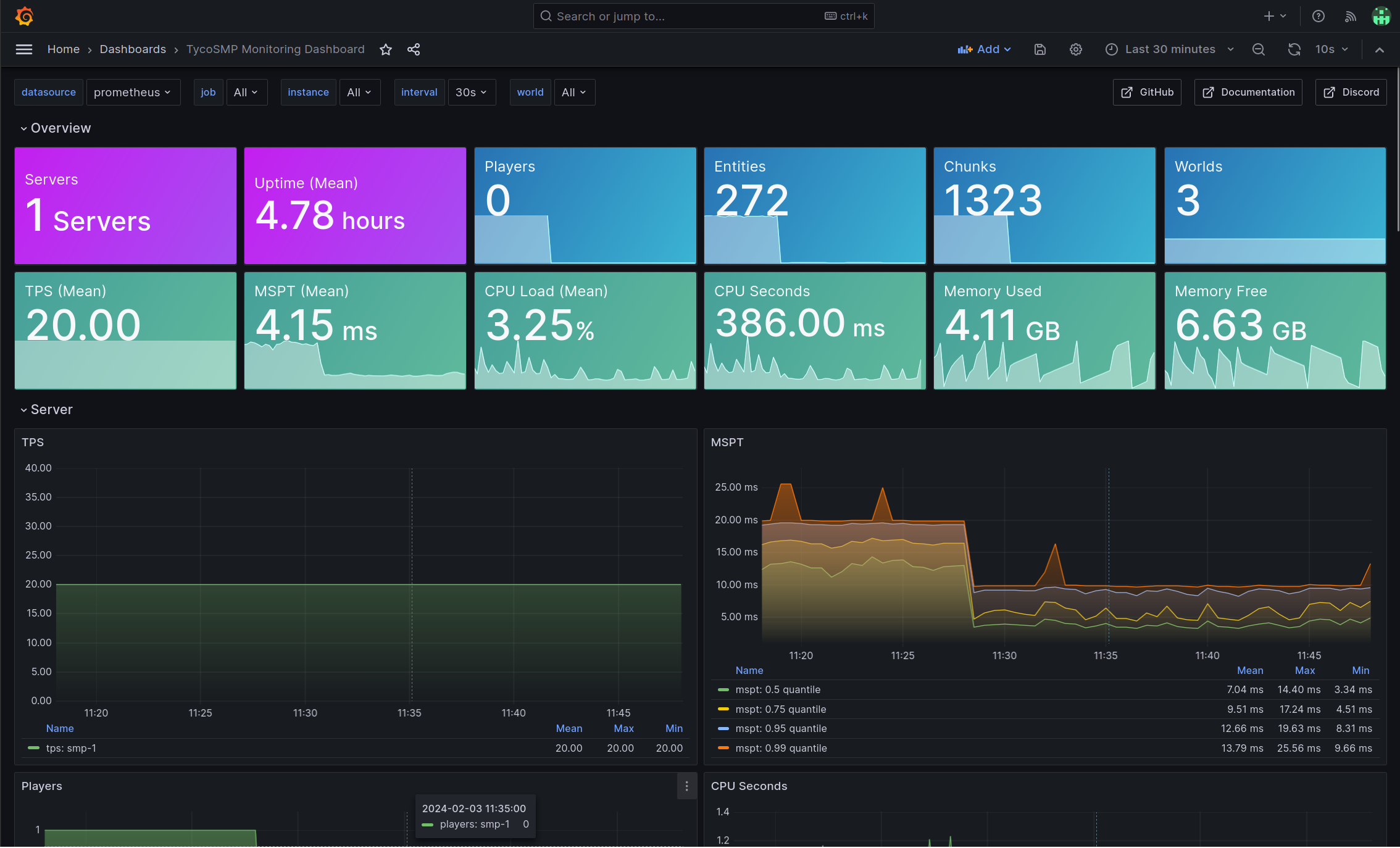

UnifiedMetrics

With the server in a more stable state, my friend who was helping me host it and I decided to set up some way to monitor server metrics. We stumbled upon UnifiedMetrics and decided to give it a shot. We got everything set up without much issue and used Grafana as our front end.

With the server in a stable state, I was ready to let it ride.
